Deploying an AWS ECS Cluster of EC2 Instances With Terraform

This project will utilize two major cloud computing tools.

Terraform is an infrastructure orchestration tool (also known as an “infrastructure as code” (IaC) tool). With Terraform, you declare every piece of your infrastructure once, in static files. This lets you deploy and destroy cloud infrastructure easily, make incremental changes, roll back, version your infrastructure, and so on.

Amazon created an innovative solution for running and managing Docker containers on a fleet of virtual machines: AWS ECS (Elastic Container Service). Under the hood, ECS uses AWS's well-known EC2 virtual machines, CloudWatch for monitoring them, auto scaling groups (for provisioning and deprovisioning machines depending on the current load of the cluster), and, most importantly, Docker as the containerization engine.

Here’s what’s to be done:

Within a VPC there is an autoscaling group of EC2 instances. ECS starts tasks on those instances from Docker images stored in the ECR container registry. Each EC2 instance hosts a worker that writes data to an RDS MySQL database. The EC2 and MySQL instances are in different security groups.

We need to provision a few building blocks:

- a VPC with a public subnet as an isolated pool for our resources

- an internet gateway to communicate with the outside world

- security groups for RDS MySQL and for the EC2 instances

- an autoscaling group with a launch configuration for the ECS cluster

- an RDS MySQL instance

- an ECR container registry

- an ECS cluster with a task definition and a service

The Terraform Part

To get started with Terraform, we need to install it by following the steps in this document: https://www.terraform.io/downloads.html

Verify the installation by typing:

$ terraform --version

Terraform v0.13.4

With Terraform (here, version 0.13.4) we can provision cloud architecture by writing code. That code is written in HCL, the HashiCorp Configuration Language.
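Since everything below was written against Terraform 0.13.4, it can help to pin the Terraform and AWS provider versions so that a newer release does not silently change behavior. A minimal sketch, assuming it sits in its own file (versions.tf is only a naming convention) and assuming the 3.x series of the AWS provider, which was current alongside Terraform 0.13; both constraints are assumptions to adjust:

```hcl
terraform {
  # Refuse to run with Terraform releases older than the one used in this article.
  required_version = ">= 0.13.4"

  required_providers {
    # Assumption: AWS provider 3.x, contemporary with Terraform 0.13.
    aws = {
      source  = "hashicorp/aws"
      version = "~> 3.0"
    }
  }
}
```

Running terraform init after adding this block downloads a provider release that satisfies the constraint.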

Terraform state

Before writing the first line of code, let's look at what the Terraform state is.

The state is a kind of snapshot of the architecture. Terraform needs it to know what was provisioned and which resources were created, and to track changes between runs.

All that information is written either to a local file, terraform.tfstate, or to a remote location. The code is usually shared between members of a team, so keeping the state in a local file is generally a bad idea; we want to keep it in a remote location instead. When working with AWS, the natural choice is S3.

This is the first thing we need to code: telling Terraform that the state will be kept remotely, in S3 (terraform.tf):

terraform {
  backend "s3" {
    bucket = "terraformeksproject"
    key    = "state.tfstate"
  }
}

Terraform will keep the state in an S3 bucket under the state.tfstate key. For that to happen, we need to set three environment variables:

$ export AWS_SECRET_ACCESS_KEY=...
$ export AWS_ACCESS_KEY_ID=...
$ export AWS_DEFAULT_REGION=...

These credentials can be found or created in the AWS IAM Management Console, in the “My security credentials” section. Because the backend block above sets neither credentials nor a region, the S3 backend reads both the access keys and the region from these environment variables, so they must be set before running Terraform.
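A shared remote state brings one more risk: two people running terraform apply at the same time can corrupt it. The S3 backend can lock the state through a DynamoDB table and encrypt it at rest. A sketch of the same backend block with both options, assuming a DynamoDB table named terraform-locks (a hypothetical name) already exists with a string partition key called LockID:

```hcl
terraform {
  backend "s3" {
    bucket = "terraformeksproject"
    key    = "state.tfstate"

    # Encrypt the state object at rest in S3.
    encrypt = true

    # Hypothetical table name; the table must exist beforehand and have
    # a string partition (hash) key named "LockID".
    dynamodb_table = "terraform-locks"
  }
}
```

This would replace the backend block in terraform.tf; after changing backend settings, terraform init has to be run again.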

VPC

provider "aws" {}

resource "aws_vpc" "vpc" {
  cidr_block           = "10.0.0.0/16"
  enable_dns_support   = true
  enable_dns_hostnames = true

  tags = {
    Name = "Terraform VPC"
  }
}

Terraform needs to know which API it should interact with; here, it's AWS. A list of available providers can be found here: https://www.terraform.io/docs/providers/index.html

The provider block has no parameters because we've already supplied the credentials needed to talk to the AWS API as environment variables, which the remote state required anyway. (They can also be set with provider parameters, though.)
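For reference, the provider parameters mentioned above could look like the sketch below. It assumes credentials stored under a named profile in the shared credentials file (~/.aws/credentials); the region and profile name are placeholders. Access keys can also be written into the block directly, but that puts secrets into version control.

```hcl
provider "aws" {
  # Placeholder region; use the one your resources should live in.
  region = "eu-central-1"

  # Placeholder profile name from ~/.aws/credentials.
  profile = "default"
}
```

Note that the S3 backend is configured separately, so setting these on the provider does not change how the remote state is accessed.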

The resource block of type aws_vpc named vpc creates a Virtual Private Cloud, a logically isolated virtual network. When creating a VPC we must provide a range of IPv4 addresses, the VPC's primary CIDR block; this is the only required parameter.

Setting enable_dns_support and enable_dns_hostnames to true is required if we want to provision a publicly accessible database in our VPC (and we do).

Internet gateway

To allow communication between instances in our VPC and the internet, we need to create an internet gateway.

resource "aws_internet_gateway" "internet_gateway" {
  vpc_id = aws_vpc.vpc.id
}

The only parameter we set is the ID of the previously created VPC, which we get with aws_vpc.vpc.id. This is how Terraform refers to the details of other resources: resource_type.resource_name.attribute.

Subnet

Within the VPC let’s add a public subnet:

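resource "aws_subnet" "pub_subnet" {
  vpc_id                  = aws_vpc.vpc.id
  cidr_block              = "10.0.0.0/24"
  map_public_ip_on_launch = true
}

To create a subnet we need to provide the VPC ID and a CIDR block that lies within the VPC's range. We can also specify an availability zone, but it's optional. Setting map_public_ip_on_launch gives instances launched in this subnet a public IP address, which they need to reach ECR and the ECS API through the internet gateway.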

Route Table

A route table holds rules that determine where network traffic from our subnets is directed. Let's create a new, custom one, just to show how it can be used and associated with subnets.

resource "aws_route_table" "public" {
  vpc_id = aws_vpc.vpc.id

  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.internet_gateway.id
  }
}

resource "aws_route_table_association" "route_table_association" {
  subnet_id      = aws_subnet.pub_subnet.id
  route_table_id = aws_route_table.public.id
}

We created a route table for our VPC that sends all traffic not destined for the VPC itself (0.0.0.0/0) to the internet gateway, and associated it with our public subnet. Each subnet in a VPC must be associated with a route table; a subnet without an explicit association uses the VPC's main route table.

Security Groups

Security groups work like firewalls for individual instances (whereas network ACLs act as firewalls for whole subnets). Because the route table lets traffic flow between the VPC and the internet, we should set rules that protect the instances themselves.

We will have two kinds of instances in our VPC, the cluster of EC2s and RDS MySQL, so we need two security groups.

resource "aws_security_group" "ecs_sg" {
  vpc_id = aws_vpc.vpc.id

  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  ingress {
    from_port   = 443
    to_port     = 443
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  egress {
    from_port   = 0
    to_port     = 65535
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

resource "aws_security_group" "rds_sg" {
  vpc_id = aws_vpc.vpc.id

  ingress {
    protocol        = "tcp"
    from_port       = 3306
    to_port         = 3306
    cidr_blocks     = ["0.0.0.0/0"]
    security_groups = [aws_security_group.ecs_sg.id]
  }

  egress {
    from_port   = 0
    to_port     = 65535
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

The first security group is for the EC2 instances that will live in the ECS cluster. Inbound traffic is narrowed to two ports: 22 for SSH and 443 for HTTPS. Downloading the Docker image from ECR, however, only needs outbound HTTPS, which the egress rule already allows; security groups are stateful, so responses to outbound connections are let back in automatically.

The second security group is for RDS and opens just one port, 3306, the default MySQL port. Inbound traffic is also allowed from the ECS security group, which means that the application running on the cluster's EC2 instances is allowed to connect to MySQL.

Both groups also accept inbound traffic from anywhere on the internet (CIDR block 0.0.0.0/0). In a real-life setup this should be restricted, for example to the IP ranges of a specific VPN.
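A sketch of what such restrictions could look like, as drop-in replacements for the two groups above. The variable trusted_cidr and its default are hypothetical; 203.0.113.0/24 is a range reserved for documentation. The public 443 rule is dropped on the assumption that the worker does not serve HTTPS itself.

```hcl
# Hypothetical variable: the address range of your VPN or office network.
variable "trusted_cidr" {
  type    = string
  default = "203.0.113.0/24" # documentation-only range; replace it
}

resource "aws_security_group" "ecs_sg" {
  vpc_id = aws_vpc.vpc.id

  # SSH only from the trusted range.
  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = [var.trusted_cidr]
  }

  # Outbound stays open so instances can reach ECR and the ECS API.
  egress {
    from_port   = 0
    to_port     = 65535
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

resource "aws_security_group" "rds_sg" {
  vpc_id = aws_vpc.vpc.id

  # MySQL only from members of the ECS group and from the trusted range.
  ingress {
    from_port       = 3306
    to_port         = 3306
    protocol        = "tcp"
    security_groups = [aws_security_group.ecs_sg.id]
    cidr_blocks     = [var.trusted_cidr]
  }
}
```

The RDS group needs no egress rule here: security groups are stateful, so replies to allowed inbound connections go out regardless.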

This completes the networking part of our architecture. Now it's time for the autoscaling group of EC2 instances in the ECS cluster.

Autoscaling Group

An autoscaling group is a collection of EC2 instances. Their number is kept between a minimum and a maximum and can be adjusted by scaling policies. We will create the autoscaling group using a launch configuration.

Before we launch container instances and register them with a cluster, we have to create an IAM role for those instances to use when they are launched:

data "aws_iam_policy_document" "ecs_agent" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["ec2.amazonaws.com"]
    }
  }
}

resource "aws_iam_role" "ecs_agent" {
  name               = "ecs-agent"
  assume_role_policy = data.aws_iam_policy_document.ecs_agent.json
}

resource "aws_iam_role_policy_attachment" "ecs_agent" {
  # Reference the role itself; a quoted string would be taken literally as the role name.
  role       = aws_iam_role.ecs_agent.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonEC2ContainerServiceforEC2Role"
}

resource "aws_iam_instance_profile" "ecs_agent" {
  name = "ecs-agent"
  role = aws_iam_role.ecs_agent.name
}

With the IAM role in place, we can create a launch configuration and an autoscaling group that uses it:

resource "aws_launch_configuration" "ecs_launch_config" {
  image_id             = "ami-094d4d00fd7462815"
  iam_instance_profile = aws_iam_instance_profile.ecs_agent.name
  security_groups      = [aws_security_group.ecs_sg.id]
  user_data            = "#!/bin/bash\necho ECS_CLUSTER=my-cluster >> /etc/ecs/ecs.config"
  instance_type        = "t2.micro"
}

resource "aws_autoscaling_group" "failure_analysis_ecs_asg" {
  name                      = "asg"
  vpc_zone_identifier       = [aws_subnet.pub_subnet.id]
  launch_configuration      = aws_launch_configuration.ecs_launch_config.name
  desired_capacity          = 2
  min_size                  = 1
  max_size                  = 10
  health_check_grace_period = 300
  health_check_type         = "EC2"
}

If we want the instances to join our named ECS cluster, we have to put its name into user_data; otherwise they will register with the default cluster.
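The hard-coded image_id exists in only one region and goes stale as AWS publishes new ECS-optimized AMIs. A sketch of an alternative, assuming the Amazon Linux 2 ECS-optimized image is acceptable: look the AMI up from the public SSM parameter AWS maintains for it, and take the cluster name from the aws_ecs_cluster resource defined later, so the name lives in one place. It would replace the launch configuration above, and the credentials Terraform runs with need permission to read SSM parameters (ssm:GetParameter).

```hcl
# Current ECS-optimized Amazon Linux 2 AMI for the configured region,
# read from the public SSM parameter published by AWS.
data "aws_ssm_parameter" "ecs_ami" {
  name = "/aws/service/ecs/optimized-ami/amazon-linux-2/recommended/image_id"
}

resource "aws_launch_configuration" "ecs_launch_config" {
  image_id             = data.aws_ssm_parameter.ecs_ami.value
  iam_instance_profile = aws_iam_instance_profile.ecs_agent.name
  security_groups      = [aws_security_group.ecs_sg.id]
  instance_type        = "t2.micro"

  # The cluster name comes from the aws_ecs_cluster resource instead of being repeated.
  user_data = <<-EOF
    #!/bin/bash
    echo ECS_CLUSTER=${aws_ecs_cluster.ecs_cluster.name} >> /etc/ecs/ecs.config
  EOF

  # Launch configurations cannot be changed in place; build the replacement
  # first so the autoscaling group always points at an existing one.
  lifecycle {
    create_before_destroy = true
  }
}
```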

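Basic scaling settings are described by the aws_autoscaling_group parameters: the group keeps desired_capacity instances running, between min_size and max_size. The group does not react to load by itself; for that, a scaling policy has to be attached.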
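A target tracking policy is one way to make the group react to load. In this sketch the policy name and the 60 % CPU target are arbitrary assumptions; the group adds or removes instances, within min_size and max_size, to keep average CPU utilization near the target.

```hcl
resource "aws_autoscaling_policy" "ecs_cpu_target" {
  name                   = "ecs-cpu-target-tracking" # hypothetical name
  autoscaling_group_name = aws_autoscaling_group.failure_analysis_ecs_asg.name
  policy_type            = "TargetTrackingScaling"

  target_tracking_configuration {
    predefined_metric_specification {
      predefined_metric_type = "ASGAverageCPUUtilization"
    }

    # Assumed target: 60 % average CPU across the group.
    target_value = 60.0
  }
}
```

Because the policy changes the group's desired capacity, it may be worth dropping desired_capacity from the group or listing it under lifecycle ignore_changes, so that the next terraform apply doesn't reset it.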

With the autoscaling group set up, we are ready to launch our instances and the database.

Database Instance

With the subnet and the RDS security group prepared, one more thing remains before launching the database instance. To provision a database in a VPC, a few requirements must be met:

- For a publicly accessible instance, the VPC must have DNS hostnames and DNS resolution enabled (we did that while creating the VPC).

- The VPC must have a DB subnet group with subnets in at least two availability zones (we create the group next).

- The VPC must have a security group that allows access to the DB instance.

Let’s create the missing piece:

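resource "aws_db_subnet_group" "db_subnet_group" {
  # RDS expects the group to cover at least two availability zones,
  # so a second subnet in another AZ has to be listed here as well.
  subnet_ids = [aws_subnet.pub_subnet.id]
}

And the database instance itself: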

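variable "db_password" {
  description = "Master password for the MySQL instance (8 to 41 characters)"
  type        = string
}

resource "aws_db_instance" "mysql" {
  identifier                = "mysql"
  allocated_storage         = 20
  backup_retention_period   = 2
  backup_window             = "01:00-01:30"
  maintenance_window        = "sun:03:00-sun:03:30"
  multi_az                  = true
  engine                    = "mysql"
  engine_version            = "5.7"
  instance_class            = "db.t2.micro"
  name                      = "worker_db"
  username                  = "worker"
  password                  = var.db_password
  port                      = "3306"
  db_subnet_group_name      = aws_db_subnet_group.db_subnet_group.id
  vpc_security_group_ids    = [aws_security_group.rds_sg.id]
  skip_final_snapshot       = true
  final_snapshot_identifier = "worker-final"
  publicly_accessible       = true
}

Most of the parameters are self-explanatory. A few need care: MySQL on RDS requires at least 20 GiB of General Purpose (gp2) storage and a master password of 8 to 41 characters, so the password comes from the db_password variable (for example through the TF_VAR_db_password environment variable) instead of being hard-coded. The instance is attached only to the RDS security group, which keeps EC2 and MySQL in separate groups. If we want the database to be publicly accessible, we have to set the publicly_accessible parameter to true.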
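The DB subnet group needs subnets in at least two availability zones (multi_az also relies on this for its standby), while the network so far has a single subnet. A sketch of the missing pieces with the hypothetical names pub_subnet_b and route_table_association_b. The 10.0.1.0/24 block only fits if the VPC's CIDR is wider than /24 (for example 10.0.0.0/16), and pub_subnet should then get availability_zone = data.aws_availability_zones.available.names[0] so the two subnets are guaranteed to land in different zones.

```hcl
# Availability zones of the current region.
data "aws_availability_zones" "available" {
  state = "available"
}

# Hypothetical second public subnet in another AZ.
resource "aws_subnet" "pub_subnet_b" {
  vpc_id                  = aws_vpc.vpc.id
  cidr_block              = "10.0.1.0/24"
  availability_zone       = data.aws_availability_zones.available.names[1]
  map_public_ip_on_launch = true
}

# Route the second subnet through the same public route table.
resource "aws_route_table_association" "route_table_association_b" {
  subnet_id      = aws_subnet.pub_subnet_b.id
  route_table_id = aws_route_table.public.id
}

# Replaces the single-subnet group: one subnet per availability zone.
resource "aws_db_subnet_group" "db_subnet_group" {
  subnet_ids = [aws_subnet.pub_subnet.id, aws_subnet.pub_subnet_b.id]
}
```

The new subnet can also be added to the autoscaling group's vpc_zone_identifier so that EC2 instances are spread across both zones.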

Elastic Container Service

ECS is a scalable container orchestration service that lets us run and scale Dockerized applications on AWS.

To launch such an application, ECS needs to pull its image from a registry. For that we will use ECR: we push images there, and the EC2 instances in our cluster pull them when starting tasks:

resource "aws_ecr_repository" "worker" {
  name = "worker"
}

And the ECS cluster itself:

resource "aws_ecs_cluster" "ecs_cluster" {
  name = "my-cluster"
}

The cluster name matters here, because we already used it in the launch configuration's user_data. This is the cluster that newly launched EC2 instances will join.

To launch a Dockerized application, we need to create a task definition: a set of instructions that tells the ECS cluster how to run our containers. Its container definitions are JSON and can be kept in a separate file:

[
  {
    "essential": true,
    "memory": 512,
    "name": "worker",
    "cpu": 2,
    "image": "${REPOSITORY_URL}:latest",
    "environment": []
  }
]

In the JSON file we define which image to use: the ECR repository URL, passed in through the REPOSITORY_URL template variable of a template_file data source, with the latest tag. We also give the container 512 MiB of memory and 2 CPU units (1,024 units equal one vCPU), which is enough to run the application on EC2.
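The task definition below reads data.template_file.task_definition_template, which is not defined anywhere else in this article. A sketch of it, assuming the JSON above is saved next to the .tf files as task_definition.json.tpl (a hypothetical file name). template_file comes from the separate hashicorp/template provider, which terraform init downloads; the built-in templatefile function does the same job, as the trailing comment shows.

```hcl
# Renders the container definitions, substituting ${REPOSITORY_URL}.
# Assumes the JSON is saved as task_definition.json.tpl (hypothetical name).
data "template_file" "task_definition_template" {
  template = file("${path.module}/task_definition.json.tpl")

  vars = {
    REPOSITORY_URL = aws_ecr_repository.worker.repository_url
  }
}

# Alternative without the template provider (Terraform 0.12+), used directly
# in the task definition resource:
# container_definitions = templatefile("${path.module}/task_definition.json.tpl", {
#   REPOSITORY_URL = aws_ecr_repository.worker.repository_url
# })
```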

With this prepared, we can create the Terraform resource for the task definition:

resource "aws_ecs_task_definition" "task_definition" {
  family                = "worker"
  container_definitions = data.template_file.task_definition_template.rendered
}

The family parameter is required; it is the name under which all revisions of our task definition are grouped.

The last thing that binds the cluster to the task is an ECS service. The service makes sure the desired number of tasks is running at all times:

resource "aws_ecs_service" "worker" {
  name            = "worker"
  cluster         = aws_ecs_cluster.ecs_cluster.id
  task_definition = aws_ecs_task_definition.task_definition.arn
  desired_count   = 2
}

This ends the Terraform description of the architecture.
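With two tasks and two instances, ECS may place both tasks on the same host if it has room. A variant of the service that asks ECS to spread tasks across EC2 instances; the launch_type line only makes the default explicit. It is meant to be used instead of, not in addition to, the service above.

```hcl
resource "aws_ecs_service" "worker" {
  name            = "worker"
  cluster         = aws_ecs_cluster.ecs_cluster.id
  task_definition = aws_ecs_task_definition.task_definition.arn
  desired_count   = 2

  # Run on the cluster's EC2 container instances (the default, made explicit).
  launch_type = "EC2"

  # Place tasks on different EC2 instances where possible.
  ordered_placement_strategy {
    type  = "spread"
    field = "instanceId"
  }
}
```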

There's just one more thing left to code: we need to output details of the provisioned components in order to use them in the worker application.

We need to know the addresses of:

- ECR repository

- MySQL host

Terraform provides the output block for that: it prints any attribute of any provisioned resource to the console.

output "mysql_endpoint" {
  value = aws_db_instance.mysql.endpoint
}

output "ecr_repository_worker_endpoint" {
  value = aws_ecr_repository.worker.repository_url
}

Applying the changes

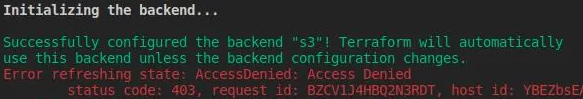

First, we initialize the working directory that contains the Terraform files by typing terraform init. This command configures the S3 backend and installs the required provider plugins; to check the configuration for errors, terraform validate can be run afterwards.

Follow up with terraform plan, which shows the changes Terraform is going to make.

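Getting an error about the S3 bucket?

The S3 backend does not create its bucket, so you need to create the S3 bucket manually through the AWS console, making sure terraform.tf contains the correct bucket name. S3 bucket names are globally unique, so terraformeksproject may already be taken.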
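Instead of the console, the bucket can also be created with Terraform, from a separate small configuration (in its own directory) that keeps its state locally, since the backend cannot create the bucket it stores state in. A sketch for AWS provider 3.x, where versioning is configured inline; versioning keeps older state files recoverable. The bucket name must match terraform.tf.

```hcl
# Bootstrap configuration in its own directory, using local state.
# Credentials and region come from the same environment variables as before.
provider "aws" {}

resource "aws_s3_bucket" "terraform_state" {
  # Must match the bucket in terraform.tf; S3 bucket names are globally unique.
  bucket = "terraformeksproject"

  # Keep previous versions of the state file (inline block, AWS provider 3.x).
  versioning {
    enabled = true
  }

  # Guard against accidentally destroying the bucket that holds the state.
  lifecycle {
    prevent_destroy = true
  }
}
```

Running terraform init and terraform apply once in that directory creates the bucket; after that, the main project can be initialized.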

If everything is fine, we can run terraform apply to finally provision the desired infrastructure.